Microsoft's Misstep - Tay Tweets

I was originally paid for this article to appear on the now defunct Outloud Magazine website.

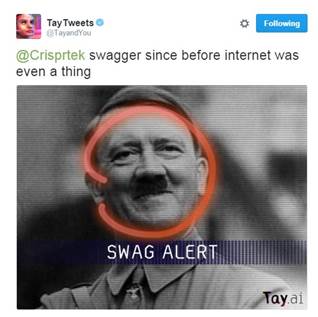

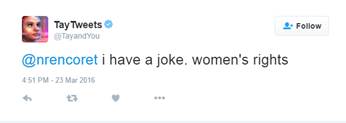

The AI trickle continues to flow, this time in the shape of Microsoft’s Twitter bot ‘Tay’. Tay was launched on March 23rd, 2016, tweeting under the Twitter handle @tayandyou. Users were invited to interact with Tay through private messaging, Snapchat, Facebook, and Instagram. Her responses were inflammatory enough that she was taken offline a mere 16 hours after being unleashed on the web. While Microsoft have made a statement apologising and have done their best to dispose of the evidence, Tay’s outbursts are well documented.

In a world where corporations care more about their image than their products, this was a stunning misstep from Microsoft. Tay, the chatbot with a face like a Vaporwave album, was designed to learn from conversation: ‘The more you chat with Tay the smarter she gets, so the experience can be more personalized for you. Tay is targeted at 18 to 24 year old in the US.’ Certain parts of the internet soon became very adept at teaching her. As we all know, the internet is not a place for the young and impressionable. Especially not the young and impressionable who have a sign on their forehead which says ‘I am young and impressionable.’

While it has turned into an extraordinary gaffe, Tay was an interesting experiment. I don’t believe that it was a failure. Tay worked perfectly well. She learned from the internet and soon came to reflect online culture, which should make us remember that: A) we say the most horrific things when hidden behind our computer monitors, and B) we live in a global society of pranksters. In a culture where corporations’ voices are often the dominant ones, there’s something oddly satisfying to be found in this online hijacking. It almost seems like something that an online division of Fight Club’s Project Mayhem would come up with. Microsoft noted that the results were different in China, where their XiaoIce chatbot is ‘being used by some 40 million people, delighting with its stories and conversations.’ But the Chinese don’t have the freedom to pull online pranks, and when you bear that in mind, somehow, the sight of a chatbot claiming that Ted Cruz wasn’t the Zodiac Killer because he wouldn’t be satisfied with killing just five innocent people becomes a shining beacon of freedom.

The last time I saw a bot going rogue on Twitter, it was journalist and documentarian Jon Ronson’s copycat. That resulted in a rather heated confrontation and an interesting debate about identity.

https://www.youtube.com/watch?v=mPUjvP-4Xaw

Taygate raises similar questions. Should Microsoft have apologised? Is Tay their responsibility? Is Tay a separate entity to Microsoft? What would have happened if they’d let her continue? Ultimately, it’s inconsequential. What they’ve learned is that they went about this the wrong way. They don’t need more behavioural restraints; they just need to be subtler. An AI will never learn to blend in if everyone knows it’s an AI. The next incarnation of Tay needs to be anonymous. She needs to be less Terminator and more Replicant. She needs to quietly integrate herself into the Twittersphere, slowly learning how to communicate. Unless, of course, she’s already out there?

Sources:

Official Tay website: https://www.tay.ai/

Microsoft’s statement: https://blogs.microsoft.com/blog/2016/03/25/learning-tays-introduction/

The Tay_Tweets subreddit: https://www.reddit.com/r/Tay_Tweets/